Waves Vienna Music Hackday 2016

The Waves Music Hackday is a one-day hackathon that takes place during the Waves Festival, a club and showcase festival with the motto “East meets West”. Many concerts and conferences took place at and around the WUK, a cultural center in the Währinger area.

The Waves Music Hackday took place for the very first time in 2015 and was a great success! It gathers people with very diverse backgrounds around music & technology: whether you are a musician, artist, hacker, developer, thinker or hobbyist creator, join this hack day and meet other creative people to spin your ideas around the future of music & technology!

I had the chance to participate in the 2016 edition. Although I knew that music creation and hardware tools were common topics, I had no idea what I would build until the day actually started. Fortunately, on Friday night, companies presented tools to help hackers build fun, surprising and innovative creations.

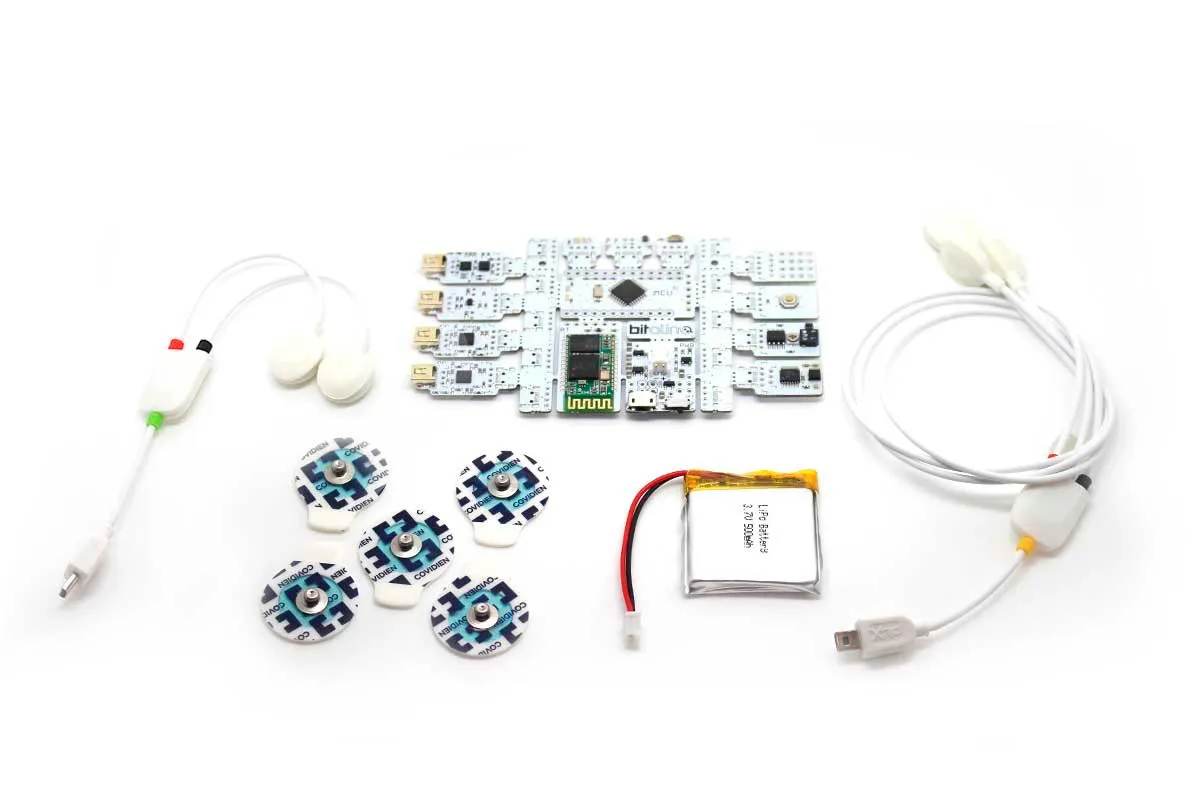

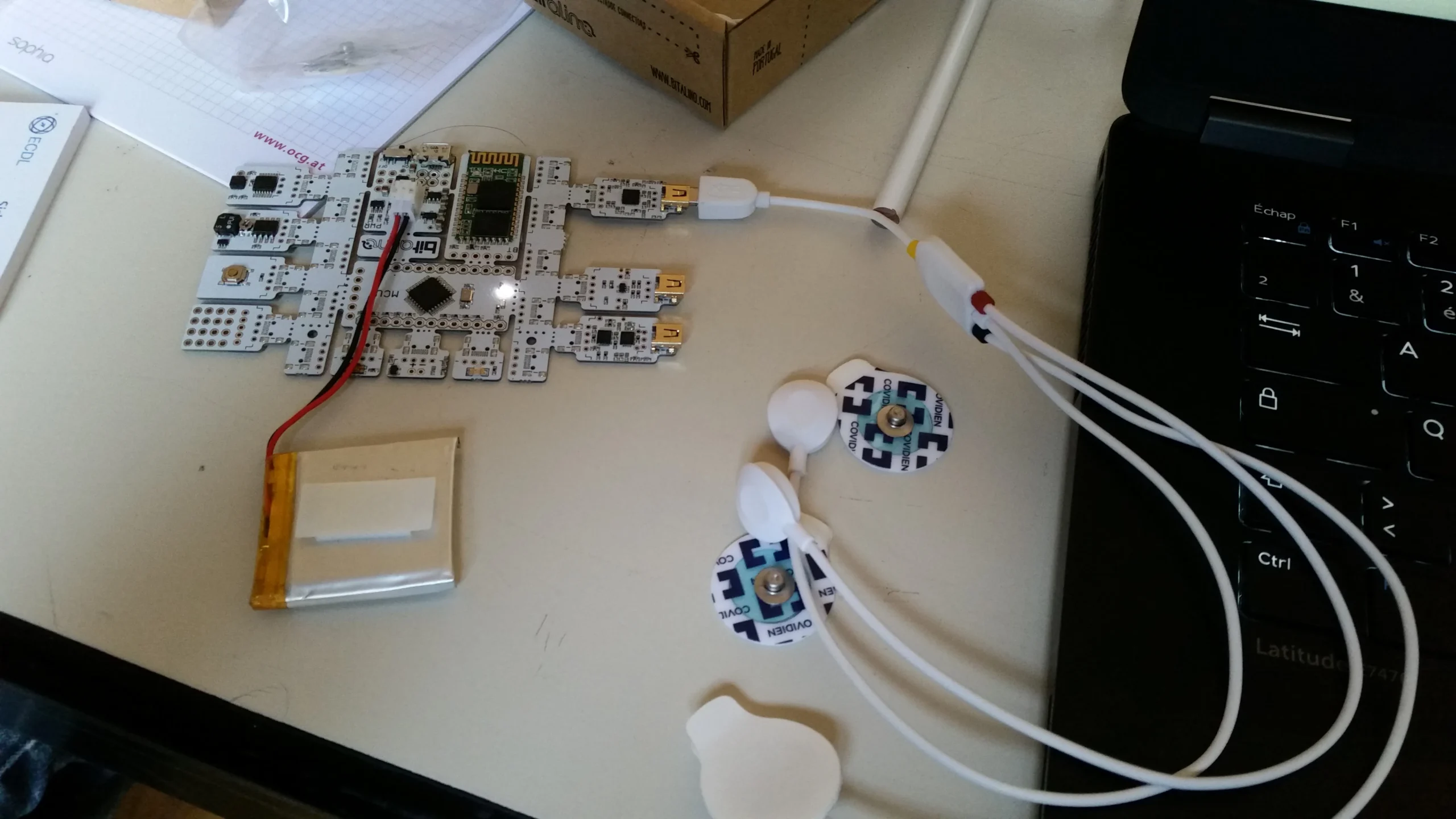

Bitalino

Several presentations gave me ideas, but Bitalino got my attention thanks to the many sensors readily available to developers, all connected to a modular board.

My first and simplest idea was to control a Deezer player with gestures. This would be not only an original feature but also a way for people with disabilities to use Deezer more easily.

Depending on the time remaining, I also wanted to capture more emotional feedback from my body and feed it into our recommendation algorithm. This would give music recommendation algorithms one more source of data to rely on: the physical state of the listener. Lying in bed in the dark or running on a bright day could influence recommendations even though the user's tastes and listening history have not changed.

As Bitalino was new to me, I spent some time in the morning exploring its capabilities and decided to start with a body controller for the Deezer player.

Capturing gestures in Python

I started from a given example on bitalino.com: MuscleBIT. This code is a good start to get values from the Electromyography (EMG) sensor. At this stage, the steps are to:

- Link Bitalino to some power (battery or USB)

- Install pybluez to communicate with the device over Bluetooth (with pip install…)

- Switch it on with the little switch on the board

- Connect it to the computer as a Bluetooth device (code is 1234)

- Get its MAC address (written on the back of the board) and paste it into the code: this is how the Bitalino Python library (included in the example) finds the device

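The example then opens the connection:

    import BITalino  # library file shipped with the MuscleBIT example

    macAddress = "20:13:05:15:37:61"  # replace with your own board's address
    SamplingRate = 1000               # samples per second

    device = BITalino.BITalino()
    device.open(macAddress, SamplingRate=SamplingRate)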

The code is basically an infinite loop that reads the device input, normalizes it and compares it to a threshold to filter out noise. If the normalized value is higher than the threshold then, in this example, the LED and the buzzer switch on. I kept this feature, but also used the resulting signal for my own needs.
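The normalize-and-threshold step can be illustrated without any hardware. The sketch below is not the MuscleBIT code: the function names, the 10-bit value range (0 to 1023, resting level around 512) and the 0.3 threshold are all assumptions chosen for illustration.

```python
def normalize(samples, baseline=512, full_scale=512.0):
    """Rectify raw samples around the baseline and scale them to [0, 1].

    baseline and full_scale assume a 10-bit converter (hypothetical values).
    """
    return [min(abs(s - baseline) / full_scale, 1.0) for s in samples]


def is_contraction(samples, threshold=0.3):
    """Return True when the mean normalized amplitude exceeds the threshold."""
    if not samples:
        return False
    levels = normalize(samples)
    return sum(levels) / len(levels) > threshold


resting = [510, 515, 509, 514]    # small wobble around the baseline
clenched = [900, 120, 880, 150]   # large swings in both directions
print(is_contraction(resting), is_contraction(clenched))
```

Averaging over a short window rather than testing single samples is one simple way to keep an isolated spike from triggering a command.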

Transferring the signal to a web page

Playing with the EMG sensor, I was surprised to see that it was quite stable and pretty easy to control. Noise is very low: if I walk around or grab and carry things, it won’t send a signal at all. I have heard that noise is a serious problem with neural sensors. Snapping my fingers or closing my fist would instantly send a signal, and keep it active until I relaxed my muscle. And it’s very reactive, too!

Now that I could capture my muscle activity, I wanted to send it to a web page. The reason is that I wanted my final app to be a Deezer Web App, as showcased on this page. I thought this would better demonstrate an alternative way to control the actual player.

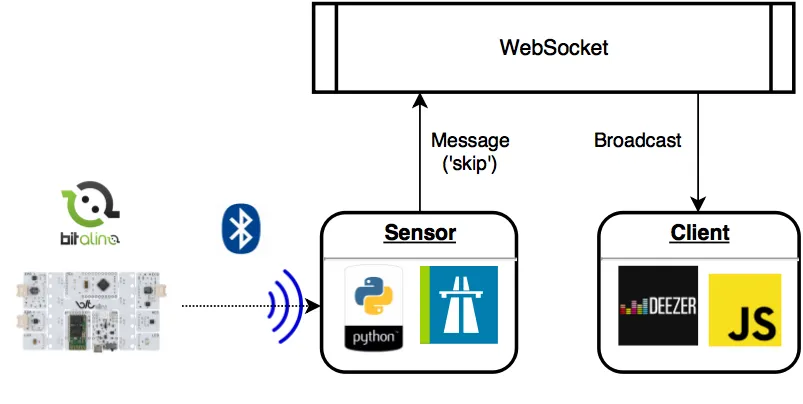

Websocket

To communicate between my back-end and the player, I set up a Websocket. Each time a signal was read from the sensor, the Python script would instantly inform the web page through the open socket.

However, my Python script was already busy listening to the sensor (remember the infinite loop), and serving a socket is an infinite loop too, so the socket had to live in a separate script. I called this script server and used the Autobahn|Python package, which is, among other things, an open-source implementation of the Websocket protocol. This example gave me what I needed. The socket is created on the server side, and both the sensor script and the web page connect to it. The server receives events from the sensor script and broadcasts them to the web page.

The server creates the Websocket and listens:

    factory = ServerFactory(u"ws://127.0.0.1:9000")
    factory.protocol = BroadcastServerProtocol
    listenWS(factory)

    # When the socket receives a message, it is broadcast to
    # all registered clients
    for c in self.clients:
        c.sendPreparedMessage(preparedMsg)
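The relay idea itself (keep a list of connected clients, forward every incoming message to all of them) can be sketched without any networking library. This is only an illustration of the pattern, not the Autobahn code: BroadcastHub and Page are hypothetical names, and Page simply stands in for a connected web page.

```python
class Page:
    """Stand-in for a connected web page; it just records what it receives."""

    def __init__(self):
        self.inbox = []

    def receive(self, message):
        self.inbox.append(message)


class BroadcastHub:
    """Keeps track of connected clients and relays each message to all of them."""

    def __init__(self):
        self.clients = []

    def register(self, client):
        if client not in self.clients:
            self.clients.append(client)

    def unregister(self, client):
        if client in self.clients:
            self.clients.remove(client)

    def broadcast(self, message):
        # Every registered client gets the same message, whoever sent it.
        for client in self.clients:
            client.receive(message)


hub = BroadcastHub()
page_a, page_b = Page(), Page()
hub.register(page_a)
hub.register(page_b)
hub.broadcast("skip")
print(page_a.inbox, page_b.inbox)
```

Here both pages end up with the same "skip" message, which is exactly what a broadcast server is meant to do.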

The JavaScript client connects to the socket and controls the Deezer player accordingly:

    var wsuri = "ws://localhost:9000";
    var sock = new WebSocket(wsuri);

    sock.onmessage = function(e) {
        switch (e.data) {
            case "skip":
                DZ.player.next();
                break;
            case "volume":
                // Toggle between a low (30) and a full (100) volume
                if (DZ.player.getVolume() == 30) {
                    DZ.player.setVolume(100);
                } else {
                    DZ.player.setVolume(30);
                }
                break;
            case "stop":
                // Toggle between pause and play
                if (DZ.player.isPlaying()) {
                    DZ.player.pause();
                } else {
                    DZ.player.play();
                }
                break;
            default:
                console.log("unknown message " + e.data);
                break;
        }
    };

Setting minimal commands

I quickly defined a basic set of commands:

- 1 contraction = play next track

- 2 contractions = reduce and restore volume

- 3 contractions = stop and resume player

This is interpreted in the sensor script, which basically measures the number of contractions during three seconds from the first contraction. It counts them and sends a text message to the socket, which rebroadcasts it.

    # Increase the number of contractions (beeps) within same message
    if time.time() - last_signal > 0.2:
        last_signal = time.time()
        beeps += 1

    ...

    # Count number of beeps and send messages
    time_dif = time.time() - last_signal
    if 3.0 > time_dif > 1.0:
        if beeps == 1:
            ws.send("skip")
        elif beeps == 2:
            ws.send("volume")
        elif beeps == 3:
            ws.send("stop")
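The same counting idea can be packaged so that it runs without the sensor, with timestamps passed in by hand. This is a sketch under assumptions, not the hackathon script: ContractionCounter, on_signal and poll are hypothetical names, and unlike the snippet above it refreshes the timestamp on every reading, so one long contraction counts only once.

```python
COMMANDS = {1: "skip", 2: "volume", 3: "stop"}


class ContractionCounter:
    def __init__(self, debounce=0.2, quiet=1.0):
        self.debounce = debounce  # minimum gap between two distinct contractions
        self.quiet = quiet        # silence after which the gesture is complete
        self.beeps = 0
        self.last_signal = None

    def on_signal(self, now):
        """Call whenever the sensor reads above the threshold."""
        if self.last_signal is None or now - self.last_signal > self.debounce:
            self.beeps += 1
        self.last_signal = now

    def poll(self, now):
        """Return a command once the gesture is over, otherwise None."""
        if self.beeps and now - self.last_signal > self.quiet:
            command = COMMANDS.get(self.beeps)  # None for unknown counts
            self.beeps = 0
            self.last_signal = None
            return command
        return None


counter = ContractionCounter()
for t in (0.0, 0.5):          # two contractions half a second apart
    counter.on_signal(t)
print(counter.poll(1.0))      # still waiting for the quiet period
print(counter.poll(1.6))      # gesture complete
```

In a real loop, `now` would come from `time.time()`; passing it in explicitly just makes the logic easy to try out by hand.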

Going further: a full integrated JS app

Of course, this architecture is far from ideal; it is the result of a quick-and-dirty hackathon situation. The main caveat is that every new client connecting to the Websocket receives the messages broadcast from the same Bitalino device. One person will control all the players!
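Within the existing architecture, one conceivable way to limit this would be to tag each message with the device it came from and deliver it only to the players paired with that device. This was not part of the hackathon code; DeviceRouter and the device identifiers below are hypothetical, and plain lists stand in for player connections.

```python
from collections import defaultdict


class DeviceRouter:
    """Delivers each message only to the clients paired with the sending device."""

    def __init__(self):
        self.subscribers = defaultdict(list)

    def subscribe(self, device_id, client):
        self.subscribers[device_id].append(client)

    def publish(self, device_id, message):
        # .get() avoids creating an entry for an unknown device
        for client in self.subscribers.get(device_id, []):
            client.append(message)


router = DeviceRouter()
alice_player, bob_player = [], []   # lists stand in for player connections
router.subscribe("device-alice", alice_player)
router.subscribe("device-bob", bob_player)
router.publish("device-alice", "skip")
print(alice_player, bob_player)     # ['skip'] []
```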

One neat solution, which I had to drop for lack of time (the hackathon was only 8 hours long, after all), is to integrate all this code into a single JS application. Web browsers have a Bluetooth capability, which the user must explicitly enable (through a flag in Chrome, for instance). From there, the user could select a nearby Bitalino device from the web page, and the JS could receive the Bitalino signals directly and control the Deezer player accordingly.

That could be a cool revamp of this application.

Going further: capturing emotions

While talking to people from IRCAM at the hackathon, and during the Bitalino presentation the day before, another sensor caught my attention: the Electrodermal Activity (EDA) sensor.

I could imagine using its data as an input to our recommendation algorithm, so that it influences the Flow we are listening to. Not that I want to make this sensor the main element of music recommendation; rather, I would add it to the signals we already use today, and maybe to other body signals we could capture later with the Bitalino. The more information we get from the listener, the better we can tailor recommendations to him/her.

Of course, I hope to make this work evolve!