Earlier this year we organized our in-house Hackathon at Deezer. One of the themes was “More Emotional Engagement”. We decided to get together and work on a real-time music visualizer (think Winamp). The idea was to display an animation that would react to the music currently playing in the Deezer app.

We needed a very flexible framework and playground to create original animations, so we decided to render the visualization directly on the GPU using shaders. This came with a bonus: shaders can run on Android, in web browsers with WebGL, or on any platform that supports OpenGL.

Extracting useful information from the audio

The first step was to identify the information we needed to extract from the track. There were several options: we could measure the current power/volume of the audio signal (think RMS), or trigger a reaction when a high-pitched sound was played. The main tool for working with the frequencies of a signal is the Fourier transform; in practice, you apply an FFT (Fast Fourier Transform) algorithm to the audio signal.
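For reference, here are the standard definitions behind those two options, for a block of $N$ samples $x_0, \dots, x_{N-1}$. The FFT is a fast algorithm for computing the discrete Fourier transform (DFT) $X_k$, while the RMS condenses the whole block into a single level:

$$X_k = \sum_{n=0}^{N-1} x_n \, e^{-2\pi i k n / N}, \qquad k = 0, \dots, N-1$$

$$\mathrm{RMS} = \sqrt{\frac{1}{N} \sum_{n=0}^{N-1} x_n^2}$$

For a real signal, only bins $0$ to $N/2$ carry distinct information, and bin $k$ corresponds to the frequency $k f_s / N$, where $f_s$ is the sample rate. With $f_s = 44100$ Hz and $N = 1024$, for example, each bin is $44100 / 1024 \approx 43.07$ Hz wide.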

There are a few ways to do that on iOS. We chose to use AVAudioEngine, installed a tap on the output buffer and then applied the FFT with the help of the Accelerate framework.

Before that, though, we needed to set up an AVAudioEngine instance and attach an AVAudioPlayerNode to it. You can see the process in the following code extract.
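As a minimal sketch of this setup, assuming playback from a local file rather than the Deezer player's own stream (the `AudioPlayer` class and its `play(fileURL:)` method are hypothetical, not Deezer's code), the player node is attached to the engine and connected to its main mixer like this:

```swift
import AVFoundation

/// Hypothetical wrapper: one player node feeding the engine's main mixer.
final class AudioPlayer {
    let engine = AVAudioEngine()
    let playerNode = AVAudioPlayerNode()

    init() {
        // A node must be attached to the engine before it can be connected.
        engine.attach(playerNode)
    }

    /// Connects the player to the main mixer using the file's processing
    /// format, schedules the whole file and starts playback.
    func play(fileURL: URL) throws {
        let file = try AVAudioFile(forReading: fileURL)
        engine.connect(playerNode, to: engine.mainMixerNode, format: file.processingFormat)
        playerNode.scheduleFile(file, at: nil, completionHandler: nil)
        try engine.start()
        playerNode.play()
    }
}
```

On iOS you would normally also configure `AVAudioSession` (for example with the `.playback` category) before starting the engine.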

Next, we needed to get the audio buffer (an array of floats) to perform the FFT. To do so, we used the installTap method to observe the output of the player node.
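A minimal sketch of such a tap, assuming a non-interleaved float format (the default for `AVAudioEngine`) and that only the first channel is analyzed; `firstChannelSamples(of:)` and `installSampleTap(on:bufferSize:onSamples:)` are hypothetical helper names:

```swift
import AVFoundation

/// Copies the first channel of a float PCM buffer into a Swift array.
/// Returns [] for non-float formats, where floatChannelData is nil.
func firstChannelSamples(of buffer: AVAudioPCMBuffer) -> [Float] {
    guard let channelData = buffer.floatChannelData else { return [] }
    let frameCount = Int(buffer.frameLength)
    return Array(UnsafeBufferPointer(start: channelData[0], count: frameCount))
}

/// Installs a tap on bus 0 of `node` and forwards each buffer's samples.
/// The block runs on an audio thread, not on the main thread.
func installSampleTap(on node: AVAudioNode,
                      bufferSize: AVAudioFrameCount = 1024,
                      onSamples: @escaping ([Float]) -> Void) {
    let format = node.outputFormat(forBus: 0)
    node.installTap(onBus: 0, bufferSize: bufferSize, format: format) { buffer, _ in
        onSamples(firstChannelSamples(of: buffer))
    }
}
```

Note that `bufferSize` is only a request: the system may deliver buffers of a different length, so the FFT step should not assume a fixed count. The tap is removed with `removeTap(onBus: 0)`.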

Now the fun part: performing the FFT on iOS. As mentioned earlier, we recommend using the Accelerate framework for this. You can see a Swift implementation below.
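As a sketch of one way to do this with vDSP's classic real FFT API (the `FFTAnalyzer` class is hypothetical, and the input length is assumed to be a power of two), the samples are packed into split-complex form, transformed in place, and converted to magnitudes:

```swift
import Accelerate

/// Hypothetical helper: magnitude spectrum of a real signal using vDSP's
/// in-place real FFT. `size` must be a power of two (e.g. 1024).
final class FFTAnalyzer {
    let size: Int
    private let log2n: vDSP_Length
    private let setup: FFTSetup

    init?(size: Int) {
        guard size >= 2, size & (size - 1) == 0,
              let setup = vDSP_create_fftsetup(vDSP_Length(size.trailingZeroBitCount),
                                               FFTRadix(kFFTRadix2)) else { return nil }
        self.size = size
        self.log2n = vDSP_Length(size.trailingZeroBitCount)
        self.setup = setup
    }

    deinit { vDSP_destroy_fftsetup(setup) }

    /// Returns size/2 magnitudes, scaled so that a sine of amplitude A centred
    /// on bin k (0 < k < size/2) yields about A in bin k.
    func magnitudes(of samples: [Float]) -> [Float] {
        precondition(samples.count == size, "expected \(size) samples")
        let half = size / 2
        var real = [Float](repeating: 0, count: half)
        var imag = [Float](repeating: 0, count: half)
        var mags = [Float](repeating: 0, count: half)
        var dc: Float = 0

        real.withUnsafeMutableBufferPointer { realPtr in
            imag.withUnsafeMutableBufferPointer { imagPtr in
                var split = DSPSplitComplex(realp: realPtr.baseAddress!,
                                            imagp: imagPtr.baseAddress!)
                // Pack the real input as (even, odd) pairs into split-complex form.
                samples.withUnsafeBufferPointer { samplesPtr in
                    samplesPtr.baseAddress!.withMemoryRebound(to: DSPComplex.self,
                                                              capacity: half) {
                        vDSP_ctoz($0, 2, &split, 1, vDSP_Length(half))
                    }
                }
                vDSP_fft_zrip(setup, &split, 1, log2n, FFTDirection(FFT_FORWARD))
                // Packed format: realp[0] holds DC, imagp[0] holds Nyquist.
                dc = split.realp[0]
                vDSP_zvabs(&split, 1, &mags, 1, vDSP_Length(half))
            }
        }
        mags[0] = abs(dc)  // keep only DC in bin 0, dropping the packed Nyquist term
        // vDSP's forward real FFT returns 2x the DFT; dividing by size
        // gives amplitude-normalised magnitudes.
        let scale = 1 / Float(size)
        return mags.map { $0 * scale }
    }
}
```

Creating the `FFTSetup` once and reusing it for every buffer matters here, since setup allocation is far more expensive than the transform itself. Applying a window (for example with `vDSP_hann_window`) before the FFT is a common optional refinement.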

Transferring the FFT to the GPU

Now that we had the FFT output, we wanted to explore what we could achieve with it.

The idea was to create the visualization with a shader: first, because it’s fast, and second, because it’s fun. Take a look at what people are doing on Shadertoy; it’s inspiring.

Creating the OpenGL view

We decided to go with OpenGL as it’s supported by almost every mobile platform. We could have attempted the same thing with Metal, but since Metal only runs on Apple platforms, we would have lost the cross-platform ability that OpenGL shaders offer.

We needed to present a GLKViewController and then draw a rectangle with a GLSL shader program attached to it.
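A minimal sketch of such a view controller, assuming OpenGL ES 2.0 (the `VisualizerViewController` name is hypothetical): it creates an `EAGLContext`, assigns it to the controller's `GLKView`, and draws in `glkView(_:drawIn:)`, which GLKit calls once per frame:

```swift
import GLKit

/// Hypothetical view controller hosting the visualizer's OpenGL ES 2 view.
final class VisualizerViewController: GLKViewController {
    private var context: EAGLContext?

    override func viewDidLoad() {
        super.viewDidLoad()
        guard let context = EAGLContext(api: .openGLES2),
              let glkView = view as? GLKView else { return }
        self.context = context
        glkView.context = context
        EAGLContext.setCurrent(context)
        preferredFramesPerSecond = 60
        // Compile the shaders and create buffers/textures here.
    }

    override func glkView(_ view: GLKView, drawIn rect: CGRect) {
        glClearColor(0, 0, 0, 1)
        glClear(GLbitfield(GL_COLOR_BUFFER_BIT))
        // Update the FFT texture and uniforms, then draw the quad.
    }
}
```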

The rectangle is simply made of two triangles. Here is the data we sent to the GPU:
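As an illustration, assuming the rectangle covers the whole screen and only 2D positions are sent (`quadVertices` and `quadVertexCount` are hypothetical names), the vertex data could look like this in normalized device coordinates:

```swift
/// Full-screen quad as two triangles in normalized device coordinates
/// (x, y pairs, counter-clockwise winding).
let quadVertices: [Float] = [
    // first triangle
    -1, -1,
     1, -1,
    -1,  1,
    // second triangle
    -1,  1,
     1, -1,
     1,  1,
]

/// Number of vertices to pass to glDrawArrays(GL_TRIANGLES, 0, count).
let quadVertexCount = quadVertices.count / 2
```

Since the quad fills the viewport, the fragment shader runs once per pixel, and texture coordinates can be derived from `gl_FragCoord` instead of being stored per vertex.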

We then created the buffer objects needed to display everything. You can check out the process here. Working with C functions in Swift is still a bit cumbersome, especially when pointers are involved, but all in all we managed to get the desired result.
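Here is a sketch of that step using the OpenGL ES 2.0 C API from Swift, assuming 2 floats per vertex and a vertex shader attribute named `a_position` (a hypothetical name). The pointer handling is exactly the cumbersome part mentioned above:

```swift
import GLKit

/// Uploads vertex data into a new vertex buffer object and returns its name.
func makeVertexBuffer(_ vertices: [Float]) -> GLuint {
    var vbo: GLuint = 0
    glGenBuffers(1, &vbo)
    glBindBuffer(GLenum(GL_ARRAY_BUFFER), vbo)
    vertices.withUnsafeBytes { bytes in
        glBufferData(GLenum(GL_ARRAY_BUFFER), GLsizeiptr(bytes.count),
                     bytes.baseAddress, GLenum(GL_STATIC_DRAW))
    }
    return vbo
}

/// Points the (hypothetical) `a_position` attribute at the bound buffer:
/// 2 floats per vertex, tightly packed, starting at offset 0.
func enablePositionAttribute(program: GLuint) {
    let location = glGetAttribLocation(program, "a_position")
    guard location >= 0 else { return }  // -1: attribute not found
    glEnableVertexAttribArray(GLuint(location))
    glVertexAttribPointer(GLuint(location), 2, GLenum(GL_FLOAT),
                          GLboolean(GL_FALSE), 0, nil)
}
```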

Passing the data to the GPU

The tricky part is usually transferring the FFT output data to the GPU, since a GPU is mainly designed to handle graphics. The way to do it is to upload a texture: instead of an image, its pixels hold the FFT data. You can see how we store the float array in a texture below:
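One portable way to do this is sketched below, assuming magnitudes roughly in 0...1 and a texture name already created with `glGenTextures`. It swaps float textures (which may require the `OES_texture_float` extension on OpenGL ES 2.0) for an 8-bit `GL_LUMINANCE` texture, one pixel wide per FFT bin and one pixel high. `luminanceBytes(from:)` and `uploadFFTTexture(_:magnitudes:)` are hypothetical helpers:

```swift
import GLKit

/// Maps magnitudes (assumed roughly in 0...1) to 8-bit grey levels,
/// clamping values outside that range and treating NaN as 0.
func luminanceBytes(from magnitudes: [Float]) -> [UInt8] {
    return magnitudes.map { value in
        value.isNaN ? 0 : UInt8((min(max(value, 0), 1) * 255).rounded())
    }
}

/// Uploads the magnitudes as a (count x 1) GL_LUMINANCE texture.
func uploadFFTTexture(_ texture: GLuint, magnitudes: [Float]) {
    let pixels = luminanceBytes(from: magnitudes)
    glBindTexture(GLenum(GL_TEXTURE_2D), texture)
    glPixelStorei(GLenum(GL_UNPACK_ALIGNMENT), 1)  // rows are not 4-byte aligned
    // No mipmaps and clamp-to-edge keep non-power-of-two widths valid in ES 2.0.
    glTexParameteri(GLenum(GL_TEXTURE_2D), GLenum(GL_TEXTURE_MIN_FILTER), GL_LINEAR)
    glTexParameteri(GLenum(GL_TEXTURE_2D), GLenum(GL_TEXTURE_MAG_FILTER), GL_LINEAR)
    glTexParameteri(GLenum(GL_TEXTURE_2D), GLenum(GL_TEXTURE_WRAP_S), GL_CLAMP_TO_EDGE)
    glTexParameteri(GLenum(GL_TEXTURE_2D), GLenum(GL_TEXTURE_WRAP_T), GL_CLAMP_TO_EDGE)
    pixels.withUnsafeBytes { bytes in
        glTexImage2D(GLenum(GL_TEXTURE_2D), 0, GL_LUMINANCE, GLsizei(pixels.count), 1, 0,
                     GLenum(GL_LUMINANCE), GLenum(GL_UNSIGNED_BYTE), bytes.baseAddress)
    }
}
```

In the shader, sampling this texture returns the grey level in every color channel, so `.r` is enough to read a bin's magnitude.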

The visualizations

Now that we had everything sorted on the GPU side, we needed to create the visualization itself. If you’re familiar with GLSL, it’s quite a simple task: you just write a fragment shader that uses the FFT output data as a texture, along with a few uniforms (elapsed time, mean value of the audio signal, etc.).
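On the Swift side, feeding those inputs to the shader each frame can be sketched as follows. This assumes a linked `program`, the quad's buffer and attribute already bound, and uniform names `u_fft`, `u_resolution` and `u_time` (hypothetical) plus `u_rms`. The drawable size in pixels can come from `GLKView`'s `drawableWidth` and `drawableHeight`:

```swift
import GLKit

/// Hypothetical per-frame draw: binds the FFT texture to unit 0, updates the
/// uniforms and draws the 6-vertex quad.
func drawFrame(program: GLuint, fftTexture: GLuint,
               width: Float, height: Float,
               elapsedTime: Float, rmsLevel: Float) {
    glUseProgram(program)

    glActiveTexture(GLenum(GL_TEXTURE0))
    glBindTexture(GLenum(GL_TEXTURE_2D), fftTexture)
    glUniform1i(glGetUniformLocation(program, "u_fft"), 0)  // sampler -> unit 0

    // glGetUniformLocation returns -1 for unused uniforms; GL ignores those calls.
    glUniform2f(glGetUniformLocation(program, "u_resolution"), width, height)
    glUniform1f(glGetUniformLocation(program, "u_time"), elapsedTime)
    glUniform1f(glGetUniformLocation(program, "u_rms"), rmsLevel)

    glDrawArrays(GLenum(GL_TRIANGLES), 0, 6)
}
```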

For a quick introduction to GLSL shader programming, you can read the excellent Book of Shaders.

Here is a simple shader that only displays the FFT data as a texture in grayscale.
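A shader of that kind might look like the GLSL ES 1.00 sources below, kept as Swift strings so they can be passed to `glShaderSource`. The sketch assumes the FFT is stored in a one-row texture bound to `u_fft` and that `u_resolution` holds the drawable size in pixels (both names hypothetical). Each column of the screen reads one frequency bin and shows its magnitude as a grey level:

```swift
/// Hypothetical GLSL ES 1.00 sources, stored as Swift strings.
let quadVertexShaderSource = """
attribute vec2 a_position;

void main() {
    gl_Position = vec4(a_position, 0.0, 1.0);
}
"""

let fftFragmentShaderSource = """
precision mediump float;

uniform sampler2D u_fft;    // width x 1 texture holding the FFT magnitudes
uniform vec2 u_resolution;  // drawable size in pixels

void main() {
    // Horizontal position picks the frequency bin; grey level = magnitude.
    vec2 uv = gl_FragCoord.xy / u_resolution;
    float magnitude = texture2D(u_fft, vec2(uv.x, 0.5)).r;
    gl_FragColor = vec4(vec3(magnitude), 1.0);
}
"""
```

The sources are compiled at runtime with `glCreateShader`, `glShaderSource` and `glCompileShader`, then linked into a program with `glLinkProgram`.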

This was all great, but we wanted to go a step further. We computed the RMS (Root Mean Square) of the audio signal to get a single value representing the current audio level, and passed it to the fragment shader as a uniform variable called u_rms (cf. the code snippet below).
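The RMS itself is cheap to compute on the CPU for each tapped buffer. A plain-Swift sketch follows; `rms(_:)` is a hypothetical helper, and Accelerate's `vDSP_rmsqv` computes the same value in a vectorized way. The result is then handed to the shader with `glUniform1f` on the `u_rms` location:

```swift
/// Root mean square of a block of samples: sqrt(mean(x^2)).
/// Returns 0 for an empty block.
func rms(_ samples: [Float]) -> Float {
    guard !samples.isEmpty else { return 0 }
    let sumOfSquares = samples.reduce(0) { $0 + $1 * $1 }
    return (sumOfSquares / Float(samples.count)).squareRoot()
}
```

Because the RMS jumps from one buffer to the next, smoothing it over a few frames (for example with a simple exponential moving average) before sending it to the GPU can make the animation less jittery.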

Just for fun we did a few more…

Next steps for this hackathon project?

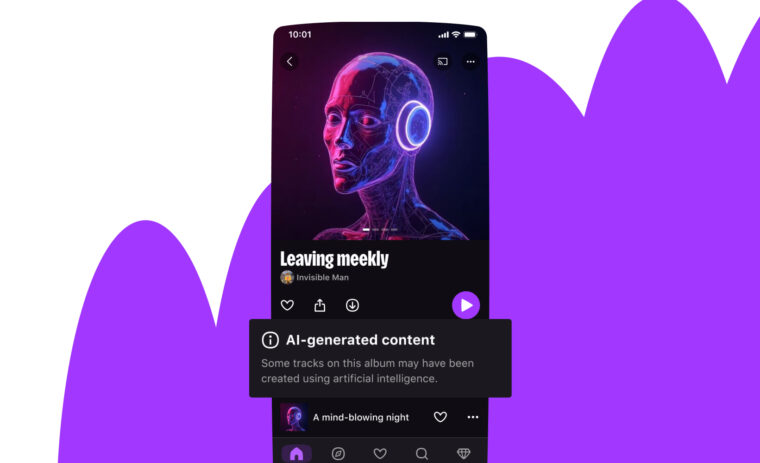

We’re always exploring new ways to improve your listening experience. We believe a clever visualization is a good way to accomplish this. This experiment will be integrated into the app one way or another, whether it’s in the Deezer mobile app ‘Labs’ or in the actual player.

By the way

You can follow some of the tech events we host on our dedicated DeezerTech meetup group and discover our latest career opportunities on jobs.deezer.com. We’re currently looking for several Senior and Lead iOS engineers to join our team.