Music listening context, such as location or activity, has been shown to strongly influence users' musical tastes. In this work, we study the relationship between user context and audio content in order to enable context-aware music recommendation that does not rely on user data.

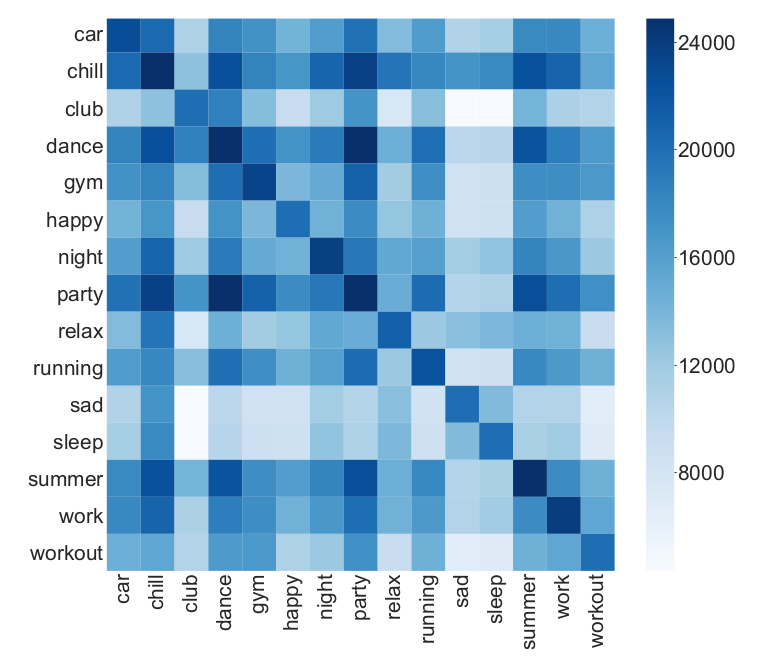

To this end, we propose a semi-automatic procedure for collecting track sets that uses playlist titles as a proxy for context labels. Using this procedure, we create and release a dataset of approximately 50k tracks labelled with 15 different contexts.
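
As a rough illustration of how playlist titles can act as context labels, the sketch below matches keywords against titles and propagates any matched context to every track in that playlist. The keyword lists, the `CONTEXT_KEYWORDS` dictionary and the `label_tracks` function are hypothetical; the actual context vocabulary, matching rules and manual curation step of the procedure are not described here.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical context -> keyword mapping (illustrative only, not the paper's vocabulary).
CONTEXT_KEYWORDS: Dict[str, List[str]] = {
    "gym": ["gym", "workout"],
    "sleep": ["sleep", "bedtime"],
}


def label_tracks(playlists: Iterable[Tuple[str, List[str]]]) -> Dict[str, Set[str]]:
    """Map each track id to the contexts whose keywords appear in the title
    of at least one playlist containing that track."""
    # One case-insensitive, whole-word regex per context.
    patterns = {
        ctx: re.compile(r"\b(" + "|".join(map(re.escape, kws)) + r")\b", re.IGNORECASE)
        for ctx, kws in CONTEXT_KEYWORDS.items()
    }
    track_contexts: Dict[str, Set[str]] = defaultdict(set)
    for title, track_ids in playlists:
        for ctx, pattern in patterns.items():
            if pattern.search(title):
                # The playlist title is taken as a (noisy) context label for all its tracks.
                for tid in track_ids:
                    track_contexts[tid].add(ctx)
    return dict(track_contexts)


playlists = [
    ("My Gym Mix", ["t1", "t2"]),
    ("Deep sleep", ["t2", "t3"]),
    ("Road trip", ["t4"]),
]
print(label_tracks(playlists))
```

Note that a track absent from every matching playlist (here `t4`) receives no label at all, rather than a confirmed negative. This is the source of the missing negative labels discussed next.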

We then present benchmark classification results on this dataset using an audio auto-tagging model. Because incomplete annotations lead to missing negative labels, which affect both the training and the evaluation of such models, we propose a sample-level weighted cross-entropy loss that accounts for the confidence in missing labels, and show that it improves context prediction results.
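
The general idea of weighting the loss by confidence in missing labels can be sketched as a multi-label binary cross-entropy in which known positives keep full weight, while each unlabelled (0) entry is scaled by a per-sample confidence that it is a true negative. This is a minimal sketch under assumptions, not the paper's formulation: the `neg_conf` matrix and the `sample_weighted_bce` function are hypothetical, and how such confidences would be estimated is left open.

```python
import math
from typing import Sequence


def sample_weighted_bce(
    probs: Sequence[Sequence[float]],
    labels: Sequence[Sequence[int]],
    neg_conf: Sequence[Sequence[float]],
    eps: float = 1e-7,
) -> float:
    """Weighted binary cross-entropy averaged over all (sample, context) entries.

    probs:    predicted sigmoid outputs, shape (N, C)
    labels:   1 = known positive, 0 = negative or missing, shape (N, C)
    neg_conf: hypothetical confidence in [0, 1] that a 0 label is truly negative
    """
    total = 0.0
    count = 0
    for p_row, y_row, w_row in zip(probs, labels, neg_conf):
        for p, y, w in zip(p_row, y_row, w_row):
            p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
            if y == 1:
                total += math.log(p)  # known positive: full weight
            else:
                total += w * math.log(1.0 - p)  # unlabelled: scaled by confidence
            count += 1
    return -total / count


probs = [[0.9, 0.2]]
labels = [[1, 0]]
print(sample_weighted_bce(probs, labels, [[1.0, 1.0]]))  # standard BCE
print(sample_weighted_bce(probs, labels, [[0.0, 0.0]]))  # negatives ignored
```

With all confidences set to 1 this reduces to ordinary binary cross-entropy. With a confidence of 0, an unlabelled entry contributes nothing, so the model is not penalised for predicting a context that may simply be unannotated.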

This paper has been published in the proceedings of the 45th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2020).