Graphs have become ubiquitous, due to the proliferation of data representing relationships or

interactions among entities. Extracting relevant information from these entities, called the nodes of the graph,

is crucial to effectively tackle numerous machine learning tasks, such as link prediction or node clustering.

While traditional approaches mainly focused on hand-engineered features, significant improvements were recently achieved

by methods aiming at directly learning node representations that summarize the graph structure.

In a nutshell, these representation learning methods aim at embedding nodes as vectors in a

low-dimensional vector space in which nodes with structural proximity in the graph should be close,

e.g. by leveraging random walk strategies, matrix factorization or graph neural networks.

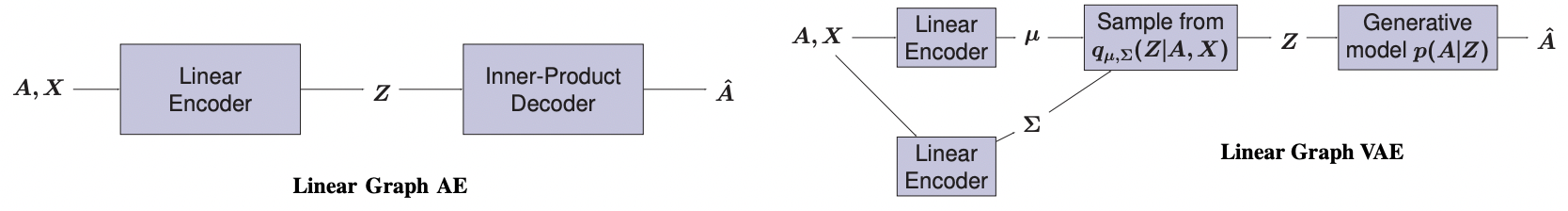

In particular, graph autoencoders (AE) and graph variational autoencoders (VAE) recently emerged as

powerful node embedding methods. Based on encoding-decoding schemes, i.e. on the design of low dimensional vector space

representations of nodes (encoding) from which reconstructing the graph (decoding) should be possible, graph

AE and VAE models have been successfully applied to address several challenging learning tasks, with competitive

results w.r.t. popular baselines. These tasks include link prediction, node clustering, matrix completion for inference and recommendation and molecular graph generation. Existing models usually rely on graph neural networks (GNN) to encode nodes into embeddings.

More precisely, most of them implement graph convolutional networks (GCN) encoders with multiple layers, a model originally introduced by Kipf and Welling (ICLR 2017).

However, despite the prevalent use of GCN encoders in recent literature, the relevance of this design choice has never been thoroughly studied nor challenged.

The actual benefit of incorporating GCNs in graph AE and VAE w.r.t. significantly simpler encoding strategies remains unclear.

In this paper, we propose to tackle this important aspect, showing that GCN-based graph AE and VAE are often unnecessarily complex for numerous applications.

Our work falls into a family of recent efforts questioning the systematic use of complex deep learning methods without clear comparison to less fancy but simpler baselines.

More precisely, our contribution is threefold:

- We introduce and study simpler versions of graph AE and VAE, replacing multi-layer GCN encoders by linear models w.r.t. the direct neighborhood (one-hop) adjacency matrix of the graph, involving a unique weight matrix to tune, fewer operations and no activation function.

- Through an extensive empirical analysis on 17 real-world graphs with various sizes and characteristics, we show that these simplified models consistently reach competitive performances w.r.t. GCN-based graph AE and VAE on link prediction and node clustering tasks. We identify the settings where simple linear encoders appear as an effective alternative to GCNs, and as first relevant baseline to implement before diving into more complex models. We also question the relevance of current benchmark datasets (Cora, Citeseer, Pubmed) commonly used in the literature to evaluate graph AE and VAE.

- We publicly release the code of these experiments, for reproducibility and easier future usages.

This paper has been published in the proceedings of the European Conference on Machine Learning

and Principles and Practice of Knowledge Discovery in

Databases (ECML-PKDD 2020).

Moreover, a preliminary version of this work has also

been presented at the NeurIPS 2019 workshop on Graph Representation Learning.