Voice-enabled devices and services have spread significantly over the last four years. In the process, voice assistants have become smarter and better at handling new and complex requests.

Voice assistance has also become available on more and more devices. In 2018, Apple announced that 500 million devices worldwide used the Siri voice assistant. Not surprisingly, according to Voicebot.ai in 2019, requesting music was also among the most common uses for smart speakers.

In June 2019, Apple announced at WWDC19 that Siri would be opened to third-party music providers in iOS 13. This was great news for Deezer, as it meant that we could offer our users a new way of accessing music.

As a result, our users can now listen to Deezer by navigating the app on their mobile device or smartwatch, or by opening Deezer on their TV. If they’re using an iPhone, they can also start Deezer just by saying ‘Hey Siri, play my Flow’.

Voice Concepts 🎙

Voice User Interface

From a Product perspective, we no longer talk about “UI” (User Interface) but about “VUI” (Voice User Interface). This VUI is available on any iPhone running iOS 13 or later. Once Siri has been enabled in the iOS settings, the user can invoke it by saying the wake word ‘Hey Siri’ or by pressing the Home button.

The VUI changes depending on the device: the way a user interacts with it depends on whether the voice assistant is integrated into a watch, a speaker, or a TV. For example, to interact with Siri on your Apple Watch, you can use Raise to Speak, which lets you talk to Siri by raising your wrist and speaking into the watch.

For now, Deezer is usable through Siri with a mobile device only📱.

Voice User Experience

The experience is defined by the scope of intents that the voice assistant understands.

Following the scope of comprehension that Siri defines for third-party music providers, we started with a POC (proof of concept) covering the ‘must-have’ intents: songs, albums, playlists, artists, and mixes. We released the POC version in December 2019 so that users could test the new integration right away and we could quickly get their feedback.

A few weeks later, in January 2020, we added the last two missing intents: podcasts and live radios.

Deezer therefore now offers its full content through voice assistants. Users just need to make sure that they say “on Deezer” in each of their requests:

‘Play my Flow on Deezer’

‘Play Beyonce on Deezer’

‘Play my Sunday mood playlist on Deezer’

‘Play the TED talks daily on Deezer’

Users can also control the player by voice by saying ‘Pause’, ‘Resume’, ‘Volume up’, ‘Volume down’, ‘I like this song’, ‘I don’t like this song’, ‘Turn off Shuffle’, etc.

If you’re interested, all the other commands are accessible here.

How Voice Assistants work

Siri is not the first voice assistant to include Deezer. Since 2018, Deezer has also been available on both Google Home and Alexa. Even though all assistants work in a similar manner, we develop a custom integration plan for each voice assistant we partner with, aligned specifically with the architecture of that partner’s device.

A Voice Assistant is an algorithm

Each voice assistant, or Artificial Intelligence, is built on a language comprehension algorithm. It learns like a child learning to speak: the more the child reads, the more familiar they become with words and the ideas behind them. This is why an AI without any data is like a baby starting to speak. As it is fed data, the AI gets smarter quickly and can reach the level of comprehension of a 7-year-old child within a few weeks.

The algorithm will scan hundreds of words that are referred to as entities in the machine learning world. In the case of Deezer, we want the algorithm to learn all song titles, artists, albums, playlists, etc. We have a base of more than 55M entities, plus a delta of new releases added every Friday.

Once the algorithm has seen the full catalog, it will be able to recognize all the entities when someone asks for them.

In addition, we expose the algorithm to actions like ‘Play’, ‘Pause’ or ‘Resume’, so that the AI also learns to detect the action to perform once the entity is found. This intent and entity recognition is called ‘Natural Language Understanding’ or ‘Natural Language Processing’ (“NLU” or “NLP” for our Data Scientist friends).

A Voice Assistant ranks entities

So now, if I say ‘Hey Siri, play Imagine by John Lennon on Deezer,’ what happens? The NLU engine will get the action ‘play’, the track’s title ‘Imagine’ and the artist name ‘John Lennon’. Then it will simply get the corresponding track with this song’s name and artist from our catalog and play it.
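To make the shape of that output concrete, here is a minimal sketch in Python. It is not how Siri's NLU engine works: a real engine is a trained model, and the regular expression below is only a stand-in that produces the same kind of result (an action plus entities). The names `ParsedRequest` and `parse_request` are hypothetical and exist only for this illustration.

```python
import re
from typing import NamedTuple, Optional


class ParsedRequest(NamedTuple):
    """Hypothetical container for what an NLU engine extracts from a request."""
    action: str
    title: Optional[str]
    artist: Optional[str]


# Toy pattern (assumption): "[Hey Siri,] <action> [<title>] [by <artist>] [on Deezer]".
# A title that itself contains " by " would be split incorrectly; a trained
# model would not have this limitation.
_PATTERN = re.compile(
    r"^(?:hey siri,?\s+)?"
    r"(?P<action>play|pause|resume)"
    r"(?:\s+(?P<title>.+?))?"
    r"(?:\s+by\s+(?P<artist>.+?))?"
    r"(?:\s+on\s+deezer)?[.!]?$",
    re.IGNORECASE,
)


def parse_request(utterance: str) -> Optional[ParsedRequest]:
    """Return the action and entities found in the utterance, or None if no action is recognized."""
    match = _PATTERN.match(utterance.strip())
    if match is None:
        return None
    return ParsedRequest(
        action=match.group("action").lower(),
        title=match.group("title"),
        artist=match.group("artist"),
    )


request = parse_request("Hey Siri, play Imagine by John Lennon on Deezer")
# request.action == "play", request.title == "Imagine", request.artist == "John Lennon"
```

A player command such as ‘Pause’ yields an action with no entities, which is why both fields are optional.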

For each entity, we also share metadata (the genre of a track, its popularity rank, its ID, etc.) so that it’s easier for the search engine to find the right entity.

Why is Siri different from other Voice Assistants?

For voice assistants such as Google Home and Alexa, entity recognition and search within the Deezer catalog are managed on Google’s and Amazon’s side, respectively. Consequently, Google and Amazon select the entity with the highest popularity rank provided by our catalog and directly play that top-ranked entity.
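The ‘play the top-ranked match’ behaviour can be sketched as follows. This is an illustration under stated assumptions, not Google’s or Amazon’s implementation: the `Entity` fields, the `top_ranked` helper, the sample catalog and the convention that rank 1 means most popular are all made up for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Entity:
    """Hypothetical catalog entry carrying the metadata shared with the assistant."""
    entity_id: int
    title: str
    artist: str
    popularity_rank: int  # assumption: 1 is the most popular


def top_ranked(catalog: List[Entity], title: str, artist: Optional[str] = None) -> Optional[Entity]:
    """Return the most popular entity matching the title (and artist, if given)."""
    matches = [
        entity for entity in catalog
        if entity.title.lower() == title.lower()
        and (artist is None or entity.artist.lower() == artist.lower())
    ]
    if not matches:
        return None
    # The lowest rank number wins, so popularity breaks ties between homonyms.
    return min(matches, key=lambda entity: entity.popularity_rank)


# Made-up sample data, for illustration only.
catalog = [
    Entity(entity_id=2, title="Imagine", artist="Some Cover Band", popularity_rank=250),
    Entity(entity_id=1, title="Imagine", artist="John Lennon", popularity_rank=1),
]

best = top_ranked(catalog, "Imagine")
# best.artist == "John Lennon": the more popular of the two tracks named "Imagine"
```

Ranking by popularity is what lets a request with only a title still resolve to a single track.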

For Siri, this is slightly different. The two matched entities, ‘Imagine’ and ‘John Lennon’, are passed to Deezer as ‘raw data’. It is then up to Deezer to find the right entity. For this, we use the search engine of our iOS mobile application.

Another option for us would have been to develop a new search endpoint dedicated to Siri use cases. However, after assessing this solution, we concluded that the performance and feature improvements it would allow would not outweigh the time and work it would require.

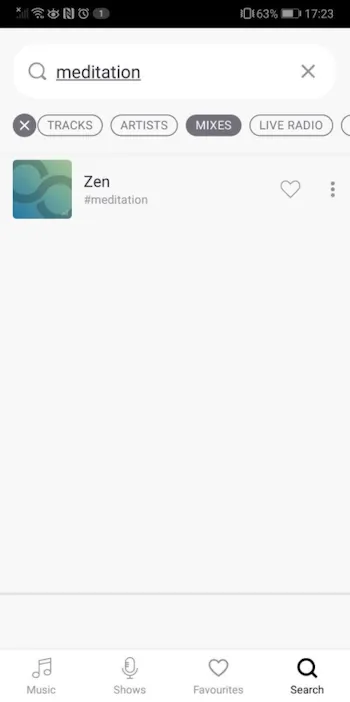

In order to boost our search results, we shared some vocabulary with Siri so that the Siri NLP algorithm would learn Deezer’s specific content such as moods, genres or users’ playlist names. For example, if you ask Siri to ‘Play some music to fall asleep’, Siri will return ‘moodNames:(meditation)’.

On Deezer’s side, if you search for ‘meditation’ as a mood, we will return the Zen mix. The user who asked for music to fall asleep will therefore hear the Zen mix.

We tested all the genres and moods available on Deezer in order to map them to the genres and moods understood by Siri.
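The mood mapping described above can be pictured as a simple lookup table. The sketch below is an assumption about the shape of such a mapping, not our production code: the table name and the `mix_for_moods` helper are hypothetical, and only the ‘meditation’ to Zen pair comes from the example in this post.

```python
from typing import List, Optional

# Hypothetical mapping from mood names returned by Siri to Deezer mixes.
# Only the "meditation" -> "Zen" pair is taken from the example above.
SIRI_MOOD_TO_DEEZER_MIX = {
    "meditation": "Zen",
}


def mix_for_moods(mood_names: List[str]) -> Optional[str]:
    """Return the first Deezer mix mapped to one of the given mood names, if any."""
    for mood in mood_names:
        mix = SIRI_MOOD_TO_DEEZER_MIX.get(mood.strip().lower())
        if mix is not None:
            return mix
    return None


# ‘Play some music to fall asleep’ arrives as moodNames:(meditation).
mix = mix_for_moods(["meditation"])
# mix == "Zen"
```

An unmapped mood returns `None`, which leaves room for a fallback such as a regular search.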

If you wish to know more, the technical integration of Siri into Deezer is explained in another post.

Key Learnings

As voice assistants become available on more and more devices, usage continues to grow and users are getting used to this new way of interacting with their devices. Our success rate on Siri (i.e. the share of requests that resulted in streamed content) is high thanks to the exemplary level of comprehension of our AI and the efficiency of our search engine.

We realized that the most used commands are the simplest ones, like ‘Play some music’ or ‘Play my Flow’. In comparison, phrases like ‘I like this track’ or ‘I don’t like this track’ are rarely used. Deezer Flow in particular is well suited to voice usage, as it gives users a mix customized to their music taste with a single voice command.

At Deezer, we are proud of this voice integration and we hope that you will check it out (if you haven’t already) and enjoy it as much as we do! We look forward to expanding this new system to other devices.

Thank you for reading!