Optimizing Kubernetes resources with Horizontal Pod Autoscaling via Custom Metrics and the Prometheus Adapter

co-authored by Denis GERMAIN

If you’ve lived in a cave for the last 10 years and have never heard of Kubernetes, we’ll be brief and say that it’s an extensible open-source platform that automates the deployment, scaling, and management of containerized applications.

Its popularity stems from its ability to provide native scalability for your apps, portability across different environments (on-prem, cloud, hybrid), and robust features like self-healing and rolling updates. Supported by a vibrant community (and a foundation, the CNCF), Kubernetes has become the de facto standard for application management in a containerized ecosystem.

At Deezer, Kubernetes has been deployed and used in production since 2018 in a bare-metal environment. We also have some clusters on the public cloud.

Over the years, we (and we are not the only ones) have come to realize that scaling applications to the right size and the right numbers of replicas is hard. Hopefully, Kubernetes can help us with that.

Ready to scale? Let’s go!

Understanding Horizontal Pod Autoscaling (HPA)

HPA is a key feature in Kubernetes. It allows you to specify targets for some representative metrics (like CPU consumption of a Pod) to determine automatically whether Kubernetes should automatically scale up (or down) your app.

Like everything in Kubernetes, it consists of an API (currently autoscaling/v2). The easiest way to interact with it is to create a YAML manifest, where you describe what the desired state of your application is, depending on the load.

By default, only simple metrics, CPU and memory consumption (collected by metrics-server) are available to the user to specify the scaling rules.

A simple example could look like this:

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

name: myapp-hpa

namespace: mynamespace

spec:

maxReplicas: 6

metrics:

- resource:

name: cpu

target:

averageUtilization: 50

type: Utilization

type: Resource

minReplicas: 2

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: myapp

Note: HPA specs can be incredibly complex and powerful as the API has been significantly enriched over the years. Please read the official documentation to learn more about this.

Based on the previous example, once this manifest is applied, Kubernetes will try to maintain no more than 50% average CPU usage on all the myapp Pods, and will add replicas if the average CPU consumption goes above this threshold. As soon as the CPU goes below the target, Kubernetes will scale down.

But while HPA using CPU and memory metrics is effective for many scenarios, it has limitations:

- Modern applications often have complex performance characteristics that are not fully captured by CPU and memory usage alone. For example, an application might be I/O-bound. Other factors like request latency, custom business metrics, or external service dependencies might provide a better basis for scaling decisions. CPU usage may also be very high when “booting” a new Pod, which in turn might trigger more scaling than necessary.

- HPA reacts to current metrics, which means there may be a delay between the consumption spike and the scaling response. This can lead to temporary performance degradation. Finding metrics that can anticipate the need to scale rather than react after a degradation can improve reliability.

To address these limitations, Kubernetes allows the use of custom metrics, providing greater flexibility and control over the scaling behavior of applications. This is where tools like Prometheus and Prometheus Adapter come into play, enabling more nuanced and effective autoscaling strategies.

Prometheus and the /-/metrics

Another great project under the CNCF’s umbrella is Prometheus, an open source monitoring and alerting tool. Prometheus features a Timeseries Database optimized for storing infrastructure metrics and a query language allowing easy yet powerful deep dives in those metrics.

Usually, monitoring tools fall under two major categories. Those that receive the metrics from clients who “push” them, and those that periodically “pull” the metrics from the apps themselves. Prometheus uses the pull strategy (most of the time), and by default it pulls the metrics every 30 seconds.

This means that you don’t need to install an “agent” on your apps, BUT that you have to specify to Prometheus a list of “targets” that expose HTTP endpoints (your apps) serving metrics in a specific format, usually on a /-/metrics path:

$ kubectl -n mynamespace port-forward myapp-5584c5c8f8-gbsw8 3000

Forwarding from 127.0.0.1:3000 -> 3000

Forwarding from [::1]:3000 -> 3000

# in another terminal

$ curl localhost:3000/-/metrics/ 2> /dev/null | head

# HELP http_request_duration_seconds duration histogram of http responses labeled with: status_code, method, path

# TYPE http_request_duration_seconds histogram

http_request_duration_seconds_bucket{le="0.0002",status_code="200",method="GET",path="/-/health"} 0

http_request_duration_seconds_bucket{le="0.0005",status_code="200",method="GET",path="/-/health"} 364

[…]

We can then query Prometheus for those metrics by specifying the metric name and adding labels to specify which subset is of interest to us:

http_request_duration_seconds_bucket{kubernetes_namespace=’mynamespace’, kubernetes_pod_name=”myapp-5584c5c8f8-gbsw8"}

With Prometheus being the de facto standard when dealing with microservices in containerized environments, we will not give the instructions on how to deploy it here. We will assume you already have a running Prometheus server (or equivalent, as there are compatible alternatives) in your cluster, scrapping metrics from applications.

If you need a starting point, we advise you to take a look at Prometheus Operator, which will greatly speed up the deployment of a complete Prometheus stack.

So, thanks to Prometheus, we now have a bunch of metrics to choose from in order to predict if our apps need to be proactively scaled. The problem is, however, that we can’t tell the HPA to look at these metrics directly, as the HPA is not directly compatible with Prometheus PromQL query language.

Prometheus Adapter to the rescue

We need another tool that will get the metrics out of Prometheus and feed them to Kubernetes. You’ll have guessed which software this is by now: Prometheus Adapter.

Installing this software is quite straightforward — you can install it from a Helm chart hosted in the repository.

$ helm show values prometheus-community/prometheus-adapter > values.yaml

$ helm install -n monitoring prometheus-adapter prometheus-community/prometheus-adapter -f values.yaml

$ kubectl -n monitoring get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

metrics-server 2/2 2 2 1d

prom-operator-kube-state-metrics 1/1 1 1 1d

prom-operator-operator 1/1 1 1 1d

prometheus-adapter 1/1 1 1 1d

thanos-prom-operator-query 3/3 3 3 1d

In this example, you can see that we already deployed metrics server and Prometheus using Prometheus Operator, which we mentioned earlier, and that Prometheus Adapter is running.

By default, the Prometheus Adapter will be deployed with some custom metrics that we can use out of the box to scale our apps more precisely. But in this article, we will show you how to create ✨ your own ✨.

Configuring Prometheus Adapter to Expose Custom Metrics through the API Server

At the start, Prometheus Adapter’s configuration doesn’t contain any rules. It means that no custom metrics are exposed through the API server at first, and HPA can’t use any custom metrics.

Prometheus Adapter acts in this order:

- Discover metrics by contacting Prometheus,

- Associate them with Kubernetes resources (namespace, Pod, etc.),

- Check how it needs to expose them (if necessary, it can rename metrics),

- Check how it should query Prometheus to get the actual numbers, (ex. to get a rate).

First, we need to validate the custom metrics API on our cluster. Resources should be empty, but it proves the custom.metrics.k8s.io/v1beta1 API is accessible.

└─[$] kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1" | jq

{

"kind": "APIResourceList",

"apiVersion": "v1",

"groupVersion": "custom.metrics.k8s.io/v1beta1",

"resources": []

}

It then takes several steps for Prometheus Adapter to collect and provide metrics to the Kubernetes API server. The entire Prometheus Adapter configuration can be tuned through the values.yaml file of the helm release.

The first thing to configure here is “where” Prometheus Adapter can find Prometheus. If your Prometheus Adapter is running on the same cluster as your Prometheus stack, you can use a Kubernetes DNS record like below (see Kubernetes documentation DNS for Services and Pods). Otherwise, you can specify an IP address (or DNS name) and a port number.

For that, edit the values.yaml with your own technical context:

[...]

prometheus:

url: https://thanos-prom-operator-query.monitoring.svc

port: 9090

path: ""

[...]

In order to verify that Prometheus Adapter can correctly contact Prometheus, you simply need to check the Pod logs (using kubectl logs pod/prometheus-adapter-abcdefgh-ijklm or any other means at your disposal to read Pod logs).

Once this part is working, we need to add some rules to our Prometheus Adapter. In this example, we are going to use a metric called ELU (for “Event Loop Utilization”) collected from a Node.js server. It is a measure of how much time the Node.js event loop is busy processing events, compared to being idle, and it is more representative of the server load than the mere CPU percentage. You can find more information on this metric here.

Rules let us specify what to query in Prometheus. We can define which labels to import, and override the labels if necessary to match Kubernetes resource names. Here are the most useful values to specify:

- seriesQuery: run the PromQL query, optionally filtered

- resources: associate the time series labels and Kubernetes resources

- name: expose time series with different names than the original ones

- metricsQuery: method to request Prometheus to get a rate (<<.GroupBy>> means “group by Pod” by default)

[...]

rules:

default: false

custom:

- seriesQuery: 'deezer_elu_utilization{kubernetes_namespace!="",kubernetes_pod_name!=""}'

resources:

overrides:

kubernetes_namespace: {resource: "namespace"}

kubernetes_pod_name: {resource: "pod"}

name:

matches: ^deezer_elu_utilization$

as: ""

metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>}) by (<<.GroupBy>>)

prometheus:

url: https://thanos-prom-operator-query.monitoring.svc

port: 9090

path: ""

[...]

You should now be able to get some custom metrics with real values by reaching the API server. To test, we will use kubectl and the — raw parameter, which gives us more power on the requests we send to the API server.

Here are some examples of commands you can run to manually check that the metrics are correctly exposed through the API server:

# list custom metrics discovery

kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1" | jq

# list custom metrics values for each pod

kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/<namespace_name>/pods/*/deezer_elu_utilization" | jq

Warning: scaling will be strongly dependent on your metricScraping interval and your Prometheus Adapter discovery interval. The official documentation points out that you may run into trouble if you don’t watch out.

> “You’ll need to also make sure your metrics relist interval is at least your Prometheus scrape interval. If it’s less than that, you’ll see metrics periodically appear and disappear from the adapter.”

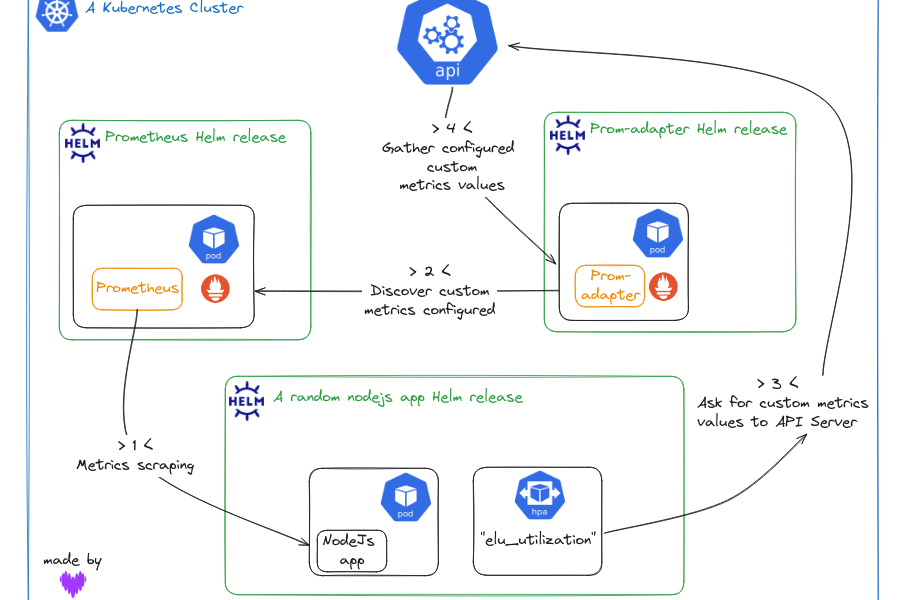

How is it going to work?

So far in this post, we have introduced a bunch of components that interact with each other.

But how is it all going to work under the hood? Well, there’s nothing better than a diagram to explain things like this:

- Prometheus scrapes the metrics exposed by our app

- Prometheus Adapter queries the Prometheus server to collect the specific metrics we defined in its configuration

- HorizontalPodAutoscaler (controller) will query the api-server to periodically check if the ELU metric is within acceptable limits…

- …which will, in turn, ask Prometheus Adapter

Let’s now create our first HorizontalPodAutoscaler!

Using a HorizontalPodAutoscaler resource with custom metrics

At the beginning of this post, we introduced HorizontalPodAutoscaler. The resource itself isn’t difficult to use, but we will dive a little deeper here.

Basically, HPA takes a target deployment to scale, a minimum replica number, a maximum replica number and the metrics to use. For the metrics part, we are now going to use our article’s custom metric, configured with Prometheus Adapter:

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

spec:

maxReplicas: 6

metrics:

- pods:

metric:

name: deezer_elu_utilization

target:

averageValue: 500m

type: Utilization

type: Pods

minReplicas: 2

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: <deployment.apps_name>

When configuring an HPA resource, you only set the metric name. But how can the HPA determine the right metric from the right Pods, since several apps may expose this very same metric? To understand this, we can examine the Prometheus Adapter logs.

I0618 12:05:29.149095 1 httplog.go:132] "HTTP" verb="GET" URI="/apis/custom.metrics.k8s.io/v1beta1/namespaces/cmfront/pods/%2A/deezer_elu_utilization?labelSelector=app%3Dstandalone-webviews%2Crelease%3Dstandalone-webviews"

As you can see, labelSelector is added to the query. And as you mention the Deployment in the HPA scaleTargetRef, HPA uses the labelSelector value from the Deployment label selector. It allows you to target the metrics from a specific Deployment. And those labels exist because during Pod scraping with Prometheus Kubernetes Pod discovery and annotations, Prometheus adds Pod labels to the metric labels. If you want to use custom labelSelector on the query, add the “metrics.pods.metric.selector” field to the HPA resource.

So, we have the custom metrics API, we have configured the Prometheus Adapter to discover and expose some metrics through it, and we have created our first HPA resource. Now it’s time to stress test the deployment and observe the behavior.

To do so, we’ll introduce you to a tool named Vegeta (it’s over 9000!)

> Vegeta is a versatile HTTP load testing tool built out of a need to drill HTTP services with a constant request rate.

We’ll use Vegeta to generate load on our application while watching the app’s Pods and the HPA state.

kubectl get hpa/<myhpa> -w -n <mynamespace>

kubectl get po -l app=<myapp> -w -n <mynamespace>

vegeta attack <app_endpoint_http>

(you can also watch events with kubectl get ev -w -n <mynamespace>)

Note: you should use 3 separate terminals to achieve that 😛.

In case your application can handle a huge load and default parameters don’t trigger scaling, you can change some parameters in the Vegeta command. We recommend using workers and rate:

- -workers: Initial number of workers (default 10)

- -rate: Number of requests per time unit [0 = infinity] (default 50/1s)

When load increases, your custom metric value will increase too, which should, in turn, trigger a Deployment scale up once the thresholds are met.

Using Prometheus Adapter in production

As Prometheus Adapter becomes a core component of your architecture, tuning and monitoring it is essential. When HPA is down, there are two potential impacts: you might be using too many resources for the current traffic, or you might not have enough resources to handle the current traffic. In particular, if the Prometheus Adapter Pod fails, your workloads are unaffected immediately; they maintain the last desired replica count calculated by HPA before the adapter went down.

To prevent this from becoming a single point of failure, make sure to scale Prometheus Adapter’s replicas to a fixed value greater than one, avoiding the default of a single replica. Additionally, you can implement a PodDisruptionBudget to ensure the HPA functionality is not lost during cluster maintenance.

For effective monitoring, consider using a PodMonitor object if you’re using Prometheus Operator, or leverage Kubernetes service discovery from Prometheus to generate basic metrics on your Prometheus Adapter stack. This allows you to create a Grafana dashboard with critical information. Alternatively, add a probe on the custom metrics API to analyze its output. If you encounter the “Error from server (ServiceUnavailable): the server is currently unable to handle the request” message, it indicates that no Prometheus Adapter Pods are available to serve the API, prompting immediate action.

By following these steps, you will ensure that your autoscaling infrastructure remains robust and reliable, preventing resource mismanagement and maintaining optimal application performance.

Conclusion

Horizontal Pod Autoscaling is the standard built-in mechanism in Kubernetes for scaling Deployments, helping your applications handle varying loads efficiently.

While traditional HPA uses CPU and memory metrics, we’ve shown you how to integrate custom metrics with Prometheus Adapter to enhance this capability, allowing for more precise and relevant scaling decisions.

Setting up Prometheus and Prometheus Adapter is straightforward and provides significant benefits in terms of flexibility and resource utilization, but configuring it can be a little counterintuitive.

If HPA with (or without) custom metrics is enough for you, great! For more complex autoscaling scenarios, consider exploring KEDA (Kubernetes Event-Driven Autoscaling), another open source project that extends the capabilities of HPA by supporting various event sources and scaling triggers.

Happy scaling! 😁

Additional sources

Prometheus Adapter documentation:

- General walkthrough

- Configuration

- https://github.com/kubernetes-sigs/prometheus-adapter/blob/master/docs/config.md

Kubernetes HPA documentation:

Other: