In this article, we will share our experience with a tricky situation we found ourselves in a few months ago. It involves Kubernetes clusters, CA certificates, Hashicorp Vault, and transparent operations in production for millions of users.

So buckle up; it might be a bumpy ride!

The hard way yes, but automated!

At Deezer, Kubernetes has been deployed and used in production for more than three years. Today, there are tons of different ways to deploy Kubernetes. Each has its advantages, depending on your cluster topology and needs (cloud, baremetal, edge, etc.).

You may have guessed it: this article’s title refers to the famous “Kubernetes the hard way” written by Kelsey Hightower.

That’s because back in 2017, our clusters were deployed using Ansible playbooks written by hand from the documentation, which looks a bit like automating “Kubernetes the hard way” with Ansible 😉.

Though you would (and should) not do it like this today, you have to put yourself in the context of 3+ years ago. At that time, in terms of deployment method, the options were more limited than they are today, especially for bare metal installations (for example, kubeadm, now GA, was still displaying big red warnings of being “NOT PRODUCTION READY”).

It’s the final countdown

Not all Deezer applications run inside Kubernetes, but a significant portion of them do. Should our Kubernetes platform go down, Deezer end users would start noticing that something is up (see what I did there? 😉). And that’s where our story begins:

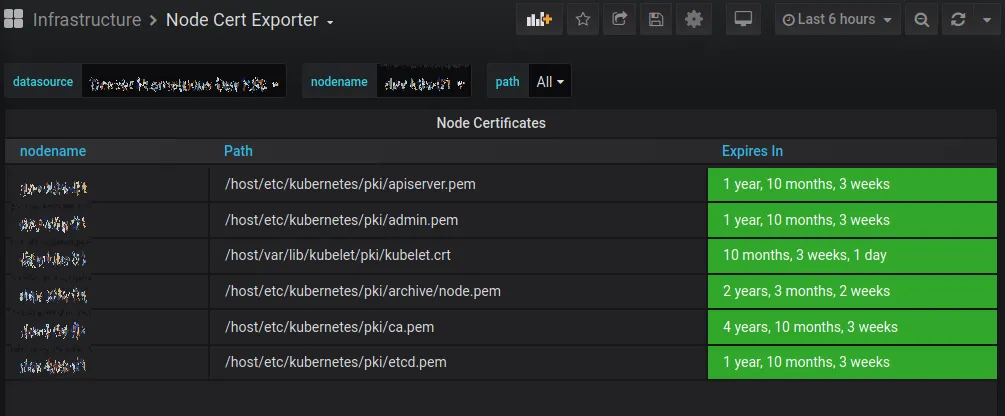

The Certificate Authority (CA) of Kubernetes was about to expire in a few months, and with it, the whole certificate chain.

To put it more graphically, instead of reassuring green indicators everywhere, we had a lot of orange warnings.

Since all communications inside the Kubernetes cluster are encrypted and authenticated with this CA, letting it expire would be VERY bad. You would essentially lose all access to your cluster and the applications within 😱. If you search a little on the Internet, you may find horror stories from people who let their cluster’s CA expire (see the sources at the end of the article).

So, we had to find a way to renew the certificate, without any interruption for end-users, before the expiration date.
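To see how close a certificate is to its expiration date, `openssl x509` can read it straight from the PEM file. The helper below is a minimal sketch, not part of our tooling: the function name `cert_expires_within` and the path in the usage example are assumptions.

```shell
# Hypothetical helper: report whether a PEM certificate expires within N days.
cert_expires_within() {
  cert_file=$1
  days=$2
  # "openssl x509 -checkend SECONDS" exits 0 when the certificate is still
  # valid after SECONDS, and non-zero when it will have expired by then
  # (or when the file cannot be read).
  if openssl x509 -checkend "$((days * 86400))" -noout -in "$cert_file" >/dev/null 2>&1; then
    echo "OK: $cert_file is valid for more than $days days"
  else
    echo "WARNING: $cert_file expires within $days days"
    return 1
  fi
}
```

For example, `cert_expires_within /etc/kubernetes/pki/ca.pem 180` would warn six months ahead, assuming the CA lives at that path on your nodes.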

Why is it a problem?

With the CA certificate involved with all internal Kubernetes components, renewing is not a trivial task. To make things worse, the official documentation is incomplete on this matter, and external documentation on this topic is relatively thin on the Internet.

There are multiple reasons for that. Many organizations use Kubernetes through a managed offering from their cloud provider, which hides the complexity of running it from them. In this case, providers worry about this for them (but do they really?).

Among the remaining Kubernetes users, most of those running on-prem clusters like us use tools like kubespray or kubeadm, which contain some sort of certificate renewal procedures but were not available at that time.

Then, among the very thin crowd that remains, regenerating a CA by hand is not something that has to be done often. CAs are often generated with expiration dates 3 to 10 years out (best practices dictate shorter periods). If you compare this to the relatively recent adoption of Kubernetes (a project initially released six years ago), you can assume that many CAs have yet to expire!

How are we going to play this?

We had to find out how to change Kubernetes CA on the fly with no end-user downtime. Luckily for everyone, if you plan it carefully, it should be possible for many workloads.

The first thing to know is that, even if the cluster is unresponsive while the certificates are being renewed, the applications in Kubernetes (Pods) that are already deployed and running will try to continue to run. However, new Jobs won’t be scheduled, and crashing Pods or workloads on failed nodes won’t be restarted.

The second thing to know is that all Pods run with an identity given by a ServiceAccount. If you don’t specify a ServiceAccount in your application’s manifest, you’ll get the default one in the Namespace.

What’s really important is that the Pod is granted access to the cluster according to the identity it was given (the ServiceAccount), which is authenticated with a token. That token is generated using the Kubernetes Controller Manager. Once we renew the CA, all tokens are toast. You must regenerate them all and restart the Pods.
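To make this concrete: inside a Pod, the ServiceAccount token and the cluster CA certificate are mounted as files, and clients send the token as a bearer token while validating the API server against the mounted CA. The sketch below reproduces such a call with curl. The function name `call_api_as_pod` is hypothetical; the directory is the default mount path.

```shell
# Hypothetical helper, meant to be run from inside a Pod.
# SA_DIR is where Kubernetes mounts the ServiceAccount credentials by default.
SA_DIR=${SA_DIR:-/var/run/secrets/kubernetes.io/serviceaccount}

call_api_as_pod() {
  path=${1:-/version}
  # ca.crt is the cluster CA the Pod trusts; token is its ServiceAccount identity.
  curl --silent --show-error \
    --cacert "$SA_DIR/ca.crt" \
    --header "Authorization: Bearer $(cat "$SA_DIR/token")" \
    "https://kubernetes.default.svc$path"
}
```

Most clients read both files when they start, which is why a Pod that talks to the API typically needs a restart after the rotation.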

So, the goal is to restart what’s required — nothing more — in the right order, then, renew all the tokens. All this quickly enough so that no application or node fails in the meantime.

Easy peasy

BUT!

There are, of course, exceptions; otherwise it would be too easy:

- Applications that need to communicate with the Kubernetes API will be disrupted until they are restarted completely (once they have their new token). This probably includes your monitoring (Prometheus), which scrapes your cluster’s API. You’ll fly blind for a few minutes.

- Applications maintaining long network connections open (like websockets) will probably be cut at some point because the IngressController you are using most likely requires access to the API server (see the previous point). If you’ve implemented a proper retry mechanism, that may not be a big issue.

- All applications will have to be restarted at some point. For apps with no replicas, a little downtime will be unavoidable. To work around this, always try to have multiple replicas for all your applications. If that’s technically impossible, restarting these applications may not be urgent, so you can plan it for later.
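To find the applications concerned by the last point before the intervention, you can ask the API for Deployments that run a single replica. This is a small sketch, assuming `kubectl` is configured for the cluster; the function name is hypothetical.

```shell
# Hypothetical helper: list Deployments that run a single replica,
# printed as namespace/name.
list_single_replica_deployments() {
  kubectl get deployments --all-namespaces --no-headers \
    -o custom-columns=NS:.metadata.namespace,NAME:.metadata.name,REPLICAS:.spec.replicas |
    awk '$3 == 1 { print $1 "/" $2 }'
}
```

It only covers Deployments; StatefulSets or bare Pods would need a similar query.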

Now that you know everything, let’s do it 😊.

Sooooo let’s renew a CA in Kubernetes with (nearly) no downtime

Before doing anything, we need to be able to roll back everything if necessary. This means backups and tested procedures to restore them.

Back up every certificate you currently use in /etc/kubernetes, but also in /var/lib/kubelet if there are any, and those of etcd.

CURDATE=$(date +"%y%m%d%H%M")

tar czf /tmp/pkibackup.${CURDATE}.tgz /var/lib/kubelet/pki/kubelet.* /etc/kubernetes

You might also want to back up all the Kubernetes ServiceAccount tokens. These tokens are generated by the Kubernetes Controller Manager and are used by your Pods to communicate with the API server (we will come back to this later).

for namespace in $(kubectl get ns --no-headers | awk '{print $1}'); do

for token in $(kubectl get secrets --namespace "$namespace" --field-selector type=kubernetes.io/service-account-token -o name); do

{ echo '---'; kubectl get "$token" --namespace "$namespace" -o yaml; } >> "/tmp/token_dump.${CURDATE}.yaml"

done

done

Finally, dumping the whole etcd database might also be a good idea (if all else fails)…

ETCDCTL_API=3 etcdctl --cacert=yourca.pem --cert=etcd.pem --key=etcd-key.pem --endpoints 127.0.0.1:2379 snapshot save /tmp/etcd.backup.$(date +'%Y%m%d_%H%M%S')
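A backup is only useful if it can be read back, so it is worth checking each archive right after creating it. The function below is a minimal sketch for the PKI tarball: the name `verify_pki_backup` and the file extensions it looks for are assumptions to adapt to your own layout.

```shell
# Hypothetical helper: check that a PKI backup archive is readable and
# actually contains certificate or key files.
verify_pki_backup() {
  archive=$1
  # "tar tzf" lists the contents without extracting; a corrupt or missing
  # archive produces no listing, so the count stays at 0.
  count=$(tar tzf "$archive" 2>/dev/null | grep -c -E '\.(pem|crt|key)$' || true)
  if [ "${count:-0}" -gt 0 ]; then
    echo "OK: $archive contains $count certificate/key files"
  else
    echo "ERROR: $archive is unreadable or contains no certificate/key files"
    return 1
  fi
}
```

This only proves the archive is readable; a real restore test on a staging cluster remains the better guarantee.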

Using Hashicorp Vault

Historically at Deezer, CA certs for the Kubernetes clusters were generated using standard openssl commands, based on the requirements described in the Kubernetes official documentation.

This works perfectly (official Kubernetes documentation uses cfssl, but that’s the same thing). Unfortunately it’s not really efficient, not super safe (CA keys are stored on disk), and prone to errors.

As most of our secrets are stored in Hashicorp Vault, we decided to take this opportunity to move the certs to Vault (which has its own PKI engine).

I won’t go into the details of using Vault’s PKI engine, but the idea here is to:

- create a new secret engine

- generate a CA that will be safely stored in Vault

- configure a role that will allow some basic configuration

- generate a bunch of certs with it

vault policy write pki-policy pki-policy.hcl

vault secrets enable -path=pki_k8s pki

vault secrets tune -max-lease-ttl=43800h pki_k8s

vault write pki_k8s/root/generate/internal common_name="kubernetes-ca" ttl=43800h

vault write pki_k8s/roles/kubernetes allowed_domains="kubernetes, default, svc, yourdomain.tld" allow_subdomains=true allow_bare_domains=true max_ttl="43800h"
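Before wiring the role into any automation, it can help to issue a throwaway certificate and look at what comes back. The sketch below assumes the `pki_k8s` mount and `kubernetes` role created above, a logged-in `vault` CLI, and `openssl` on the same machine; the function name is hypothetical.

```shell
# Hypothetical helper: issue a short-lived test certificate from the role
# and print its subject, issuer and expiration date.
check_pki_role() {
  common_name=${1:-kubernetes}
  # -field=certificate prints only the PEM certificate; the private key of
  # this throwaway certificate is discarded.
  vault write -field=certificate pki_k8s/issue/kubernetes \
    common_name="$common_name" ttl=1h |
    openssl x509 -noout -subject -issuer -enddate
}
```

If the role rejects the requested name, Vault returns an error instead of a certificate, which is a quick way to validate `allowed_domains` before the real run.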

Certs for everyone!

You get a cert!

And you get a cert!

And you get a cert! (I hope you get the “Oprah meme” as well)

In order to safely automate and deliver the certificates to the Kubernetes nodes, we used consul-template. consul-template is another tool from Hashicorp that you can use to generate configuration files based on templates, with variables (or secrets) found in Consul or Vault.

Basically, the template for a single certificate file will look like this (apiserver-cert.tpl for the API server certificate in this example):

{{- /* apiserver-cert.tpl */ -}}

{{ with secret "pki_k8s/issue/kubernetes" "common_name=kube-apiserver" "alt_names=kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.kubernetes, yourdomain.tld" "ip_sans=100.64.0.1, IP.ADDRESS.MASTER.1, IP.ADDRESS.MASTER.2, IP.ADDRESS.MASTER.3" "exclude_cn_from_sans=true" "ttl=17520h" }}

{{ .Data.certificate }}{{ end}}

The consul-template configuration file will look like this:

template {

source = "/etc/consul.d/templates/ca.pem.tpl"

destination = "/etc/kubernetes/pki/ca.pem"

}

template {

source = "/etc/consul.d/templates/admin-cert.tpl"

destination = "/etc/kubernetes/pki/admin.pem"

}

template {

blah blah blah

...

And we’ll run a command like this one, which will replace all previous certificates with the new ones.

consul-template -config /etc/consul.d/templates/consul-template-config-master.hcl

Note: One issue with this approach is that each call of the consul-template executable will produce a new certificate. For individual certs like node certs, this is fine. But that’s not true for the API server certificate and key, which have to be the same on every master node. So, we made an exception for all certificates on the master nodes: we generated them on only one node, then pushed them to all the other master nodes.
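We did this copy with Ansible; the plain rsync loop below is only an illustration of the idea. The function name, the `root@` SSH user, and the host names in the usage example are assumptions.

```shell
# Hypothetical helper: copy the certificates generated on the first master
# to the other masters. Host names are passed as arguments.
push_master_certs() {
  pki_dir=${PKI_DIR:-/etc/kubernetes/pki}
  for host in "$@"; do
    # -a (archive mode) keeps permissions and timestamps; the trailing
    # slashes copy the directory contents rather than the directory itself.
    rsync -a "$pki_dir/" "root@$host:$pki_dir/" || return 1
    echo "pushed $pki_dir to $host"
  done
}
```

A call would look like `push_master_certs master-2 master-3`, run from the node where the certificates were generated.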

Ground control to Major Tom

All the certificates are now refreshed. But not all the Kubernetes components support certificate reload on the fly. We will need to restart them in the right order!

The first thing to do is to restart all etcd servers, roughly at the same time. Once you do this, the API server will be unable to query the Kubernetes cluster’s state. That means you will lose all control over your cluster 😱.

systemctl restart etcd

At this point, all future kubectl commands will fail. All Kubernetes functions (such as autoscaling, scheduling Pods, etc.) will stop working, so our priority is to restore them as soon as possible. This can be fixed quickly by restarting the API servers manually (or killing them with a SIGKILL if they run as Kubernetes Pods and not systemd units).
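Rather than retrying kubectl by hand, a small polling loop can tell you when the API server answers again. This is a sketch under assumptions: the function name, the 2-second pause, and the default of 30 attempts are arbitrary choices.

```shell
# Hypothetical helper: poll the API server health endpoint until it answers,
# giving up after a number of attempts.
wait_for_apiserver() {
  max_attempts=${1:-30}
  attempt=1
  until kubectl get --raw='/healthz' >/dev/null 2>&1; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "API server still unreachable after $attempt attempts"
      return 1
    fi
    attempt=$((attempt + 1))
    sleep 2
  done
  echo "API server is answering (attempt $attempt)"
}
```

Running `wait_for_apiserver 60` right after restarting the API servers gives a clear signal for when to continue with the next step.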

We can then start to properly restart the control plane components that rely on etcd or the API server.

Start with the CNI (flannel, for example). Then delete the kubelet certificate/key pair and restart the kubelet on all nodes, which will automatically regenerate the pair.

Note: the location of the kubelet certificates may depend on your setup, but they can usually be found in /var/lib/kubelet/pki/ on all the nodes.

rm /var/lib/kubelet/pki/kubelet.{crt,key}

systemctl restart kubelet
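Once the kubelets are back, the nodes should report as Ready again. The helper below, whose name is hypothetical, lists the ones that do not, assuming the API server is reachable from where you run it.

```shell
# Hypothetical helper: print the names of nodes that are not Ready.
list_not_ready_nodes() {
  # STATUS is the second column; it can read "Ready,SchedulingDisabled"
  # for a cordoned node, so match on the prefix.
  kubectl get nodes --no-headers | awk '$2 !~ /^Ready/ { print $1 }'
}
```

An empty output means every kubelet has re-registered with the API server.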

Finally, we can restart all remaining Kubernetes control plane components using kubectl commands from the master nodes themselves.

/usr/bin/kubectl --namespace kube-system delete pods --selector component=kube-apiserver

/usr/bin/kubectl --namespace kube-system delete pods --selector component=kube-controller-manager

/usr/bin/kubectl --namespace kube-system delete pods --selector component=kube-scheduler

Our control plane is up again!

The tokens sleep tonight

Now that the control plane runs with the new certificates, if you look at the API server logs, you’ll see lots of cryptic error messages about wrong tokens.

We have to refresh all the tokens stored in the cluster. The Kubernetes Controller Manager will do this automatically once we clear them from the Secrets. The loop below goes through all the Namespaces, finds all Secrets of type service-account-token, and removes the token from each of them.

for ns in `kubectl get ns | grep Active | awk '{ print $1 }'`; do

for token in `/usr/bin/kubectl get secrets --namespace $ns --field-selector type=kubernetes.io/service-account-token -o name`; do

/usr/bin/kubectl get $token --namespace $ns -o yaml | /bin/sed '/token: /d' | /usr/bin/kubectl replace -f - ;

done

done
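To check that the Controller Manager has repopulated every Secret, you can look for service-account-token Secrets that still have no token. This is a sketch with a hypothetical function name; it relies on kubectl printing an empty value when the `token` key is missing.

```shell
# Hypothetical helper: list service-account-token Secrets (namespace/name)
# whose token has not been regenerated yet.
list_secrets_without_token() {
  kubectl get secrets --all-namespaces \
    --field-selector type=kubernetes.io/service-account-token \
    -o jsonpath='{range .items[*]}{.metadata.namespace}{"/"}{.metadata.name}{" "}{.data.token}{"\n"}{end}' |
    awk 'NF == 1 { print $1 }'
}
```

When the output is empty, all tokens have been reissued and the applications can be restarted.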

We can now move on to restarting applications, with priority to those that really need access to the API server.

Now, the applications

Restart coredns first (or kubedns, depending on your setup). Since the certs have been renewed, all internal Kubernetes name resolution should be failing, which will soon be problematic.

/usr/bin/kubectl --namespace kube-system rollout restart deployment.apps/coredns

But you should also restart every app that seems important to the function of the cluster, including (but not limited to):

- kube-proxy

- prometheus

- Ingress controllers

- any monitoring app

Finally, restart all applications that need API access after that. All applications that don’t need access to the API server can be restarted when you have the time.
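For the applications that can be restarted in bulk, a rolling restart per Namespace keeps replicated workloads available while their Pods pick up the new tokens. The function below is a minimal sketch: its name and the 300-second timeout are assumptions, and it only handles Deployments.

```shell
# Hypothetical helper: rolling-restart every Deployment in a Namespace and
# wait for each rollout to finish.
restart_deployments() {
  ns=$1
  # Without a resource name, "rollout restart" applies to every Deployment
  # in the Namespace.
  kubectl --namespace "$ns" rollout restart deployment || return 1
  for deploy in $(kubectl --namespace "$ns" get deployments -o name); do
    kubectl --namespace "$ns" rollout status "$deploy" --timeout=300s || return 1
  done
}
```

Calling it Namespace by Namespace, most critical first, follows the priority order described above.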

Your Kubernetes CA certificate is now renewed; you can relax for a few more months 😊.

Wrapping this up

Renewing Kubernetes CA certificates is not a trivial task, but we’ve learned that it can be done in most cases with careful planning.

We automated all the tasks described in this article in Ansible roles and played them on staging environments until we were ready to roll it out.

Last December, we planned this intervention in all our clusters. They were all updated in a few minutes with no observed downtime on our services visible to end-users, and no more disruption than expected on applications that required access to the API server.

I love it when a plan comes together

Sources

Postmortems on CA certs expirations

Building and breaking Kubernetes clusters on the fly

Kubernetes official documentation on certificates

- Kubernetes — Single Root CA best practices

- Kubernetes — Manual rotation of CA certificates

- Kubeadm — Manual certificate renewal

Hashicorp Vault